Transformer application in JUNO

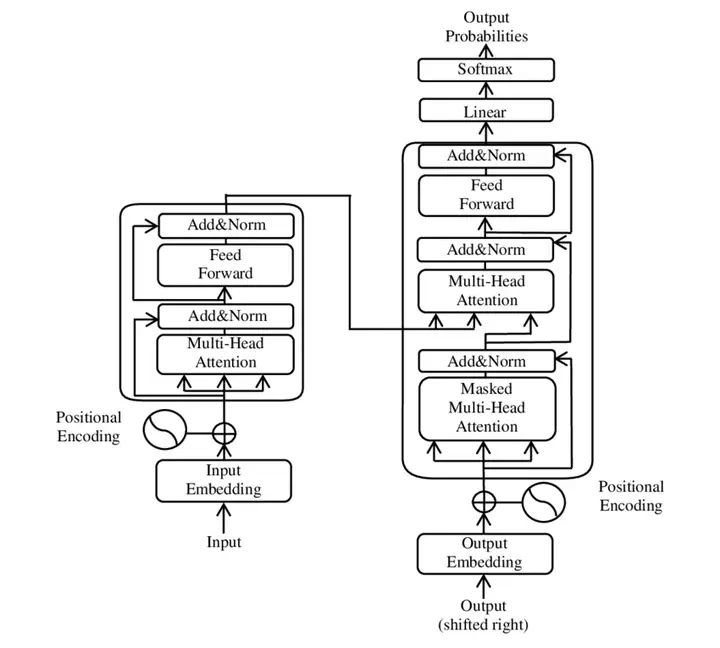

The Transformer is a deep learning architecture that underpins models such as GPT-4 and ParT. GPT-4 is a large language model that has demonstrated an outstanding ability to understand and follow instructions, and thus exhibits strong artificial intelligence. ParT (Particle Transformer) is an architecture for jet tagging used by ATLAS/CMS at the LHC, developed by CERN scientist Qu Huilin et al.

Given these achievements in artificial intelligence and in collider particle physics, the Transformer is expected to benefit neutrino physics as well, since the ATLAS/CMS and JUNO detectors are similar in nature: both act as cameras.

In JUNO, particle energy and direction reconstruction, particle type identification, signal-background separation, and pile-up separation are of fundamental importance to the data analysis for several physics targets, including solar neutrinos, atmospheric neutrinos, geo-neutrinos, and supernova neutrinos. Several groups at IHEP have demonstrated that the Transformer handles complex tasks well and outperforms traditional machine learning methods.

Studying the advantages of applying the Transformer to JUNO analysis will be challenging yet pioneering work, and it is urgent and essential to develop a set of calibration and evaluation criteria for machine learning algorithms.

If you are interested in the project, we have open positions for research assistants, PhD students, and postdocs. Please refer to the JOB for more details.